Developing AI Features for Duo Self-Hosted

This document outlines the process for developing AI features for GitLab Duo Self-Hosted. The process is similar to developing AI features for Duo SaaS, with some differences.

Gaining access to a hosted model

The following models are available to GitLab team members for development purposes as of July 2025:

- Claude Sonnet 3.5 on AWS Bedrock

- Claude Sonnet 3.5 v2 on AWS Bedrock

- Claude Sonnet 3.7 on AWS Bedrock

- Claude Sonnet 4 on AWS Bedrock

- Claude Haiku 3.5 on AWS Bedrock

- Llama 3.3 70b on AWS Bedrock

- Llama 3.1 8b on AWS Bedrock

- Llama 3.1 70b on AWS Bedrock

- Mistral Small on FireworksAI

- Mixtral 8x22b on FireworksAI

- Codestral 22b v0.1 on FireworksAI

- Llama 3.1 70b on FireworksAI

- Llama 3.1 8b on FireworksAI

- Llama 3.3 70b on FireworksAI

Development environments provide access to a limited set of models for cost optimization. The complete model catalog is available in production deployments.

Gaining access to models on FireworksAI

To gain access to FireworksAI, first create an Access Request. See this example access request if you aren't sure what information to fill in.

Our FireworksAI account is managed by Create::Code Creation. Once access is granted, navigate to https://fireworks.ai/ to create an API key.

Gaining access to models on AWS Bedrock

To gain access to models in AWS Bedrock, create an access request using the aws_services_account_iam_update template. See this example access request if you aren't sure what information to fill in.

Once your access request is approved, you can gain access to AWS credentials by visiting https://gitlabsandbox.cloud/login.

After logging into gitlabsandbox.cloud, perform the following steps:

- Select the cstm-mdls-dev-bedrock AWS account.

- On the top right corner of the page, select View IAM Credentials.

- In the modal that opens, you should see the AWS Console URL, Username, and Password. Visit the AWS Console URL and sign in with that username and password.

On AWS Bedrock, you must gain access to the models you want to use. To do this:

- Visit the AWS Model Catalog Console.

- Make sure your location is set to us-east-1.

- From the list of models, find the model you want to use, and hover over the Available to request link. Then select Request access.

- Complete the form to request access to the model.

Your access should be granted within a few minutes.

Generating Access Key and Secret Key on AWS

To use AWS Bedrock models, you must generate access keys. To generate these access keys:

- Visit the IAM Console.

- Select the Users tab.

- Select your username.

- Select the Security credentials tab.

- Select Create access key.

- Select Download .csv to download the access keys.

Keep the access keys in a secure location. You will need them to configure the model.

Alternatively, follow this video on how to create access and secret keys in AWS.

Setting up your GDK environment

GitLab Duo Self-Hosted requires that your GDK environment runs in Self-Managed mode. It does not work in Multi-Tenant/SaaS mode.

To set up your GDK environment to run GitLab Duo Self-Hosted, follow the steps in this AI development documentation, under GitLab Self-Managed / Dedicated mode.

Setting up Environment Variables

To use the hosted models, set the following environment variables on your AI gateway:

- In the GDK_ROOT/gitlab-ai-gateway/.env file, set the following variables:

      AWS_ACCESS_KEY_ID=your-access-key-id
      AWS_SECRET_ACCESS_KEY=your-secret-access-key
      AWS_REGION=us-east-1
      FIREWORKS_AI_API_KEY=your-fireworks-api-key
      AIGW_CUSTOM_MODELS__ENABLED=true
      # useful for debugging
      AIGW_LOGGING__ENABLE_REQUEST_LOGGING=true
      AIGW_LOGGING__ENABLE_LITELLM_LOGGING=true

- In the GDK_ROOT/env.runit file, set the following variable:

      export GITLAB_SIMULATE_SAAS=0

- Seed your Duo self-hosted models using bundle exec rake gitlab:duo:seed_self_hosted_models.

- Running bundle exec rake gitlab:duo:list_self_hosted_models should output the list of created models.

- Restart your GDK for the changes to take effect using gdk restart.
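Before restarting, it can help to confirm that the AI gateway .env file contains the variables listed above. The following is a minimal sketch, not part of GDK or the AI gateway: the helper names parse_env and missing_keys are hypothetical, and the required-key list assumes you use both AWS Bedrock and FireworksAI (trim it if you use only one provider).

```python
from pathlib import Path

# Variables named in this document; adjust to the providers you actually use.
REQUIRED_KEYS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_REGION",
    "FIREWORKS_AI_API_KEY",
    "AIGW_CUSTOM_MODELS__ENABLED",
)


def parse_env(text: str) -> dict:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env: dict, required=REQUIRED_KEYS) -> list:
    """Return the required keys that are absent or empty, in order."""
    return [key for key in required if not env.get(key)]


if __name__ == "__main__":
    # Assumed to be run from GDK_ROOT; change the path if your layout differs.
    env_path = Path("gitlab-ai-gateway/.env")
    if env_path.exists():
        missing = missing_keys(parse_env(env_path.read_text()))
        print("missing:", ", ".join(missing) if missing else "none")
    else:
        print(f"{env_path} not found")
```

The check only looks for presence. It does not verify that the credentials are valid or that the placeholder values have been replaced.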

Configuring the custom model in the UI

To enable the use of self-hosted models in the GitLab instance, follow these steps:

- On your GDK instance, go to /admin/gitlab_duo/configuration.

- Select the Use beta models and features in GitLab Duo Self-Hosted checkbox.

- For Local AI Gateway URL, enter the URL of your AI gateway instance. In most cases, this will be http://localhost:5052.

- Save the changes.

- To save your changes, select Create self-hosted model.

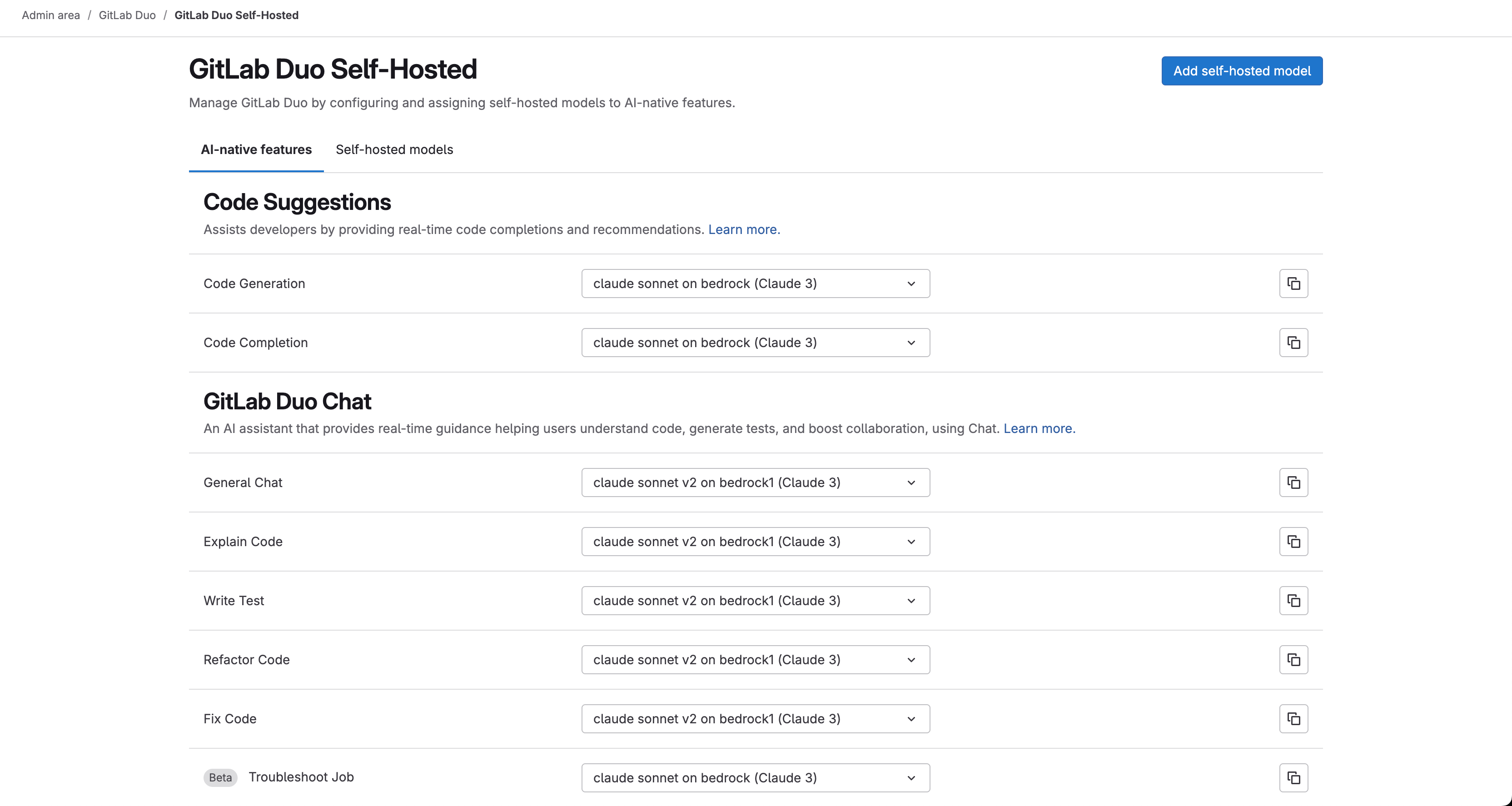
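If the configuration does not seem to take effect, one quick check is whether anything is listening at the Local AI Gateway URL you entered. The sketch below is an assumption-laden helper rather than an official tool: gateway_is_reachable is a hypothetical name, and it only tests that a TCP connection can be opened, not that the AI gateway itself is healthy.

```python
import socket
from urllib.parse import urlparse


def gateway_is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        raise ValueError(f"not an http(s) URL: {url!r}")
    # Fall back to the scheme's default port when none is given.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    url = "http://localhost:5052"  # the common default mentioned in this document
    state = "reachable" if gateway_is_reachable(url) else "not reachable"
    print(f"{url} is {state}")
```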

Using the self-hosted model to power AI features

To use the created self-hosted model to power AI-native features:

- On your GDK instance, go to /admin/gitlab_duo/self_hosted.

- For each AI feature you want to use with your self-hosted model (for example, Code Generation, Code Completion, General Chat, Explain Code, and so on), select your newly created self-hosted model (for example, Claude 3.5 Sonnet on Bedrock) from the corresponding dropdown list.

- Optional. To copy the configuration to all features under a specific category, select the copy icon next to it.

- After making your selections, the changes are usually saved automatically.

With this, you have successfully configured the self-hosted model to power AI-native features in your GitLab instance. To test the feature using, for example, Chat, open Chat and say Hello. You should see the response powered by your self-hosted model in the chat.

Moving a feature available in GitLab.com or GitLab Self-Managed to GitLab Duo Self-Hosted

To move a feature available in GitLab.com or GitLab Self-Managed to GitLab Duo Self-Hosted:

- Make the feature configurable to use a self-hosted model.

- Add prompts for the feature, for each model family you want to support.

Making the feature configurable to use a self-hosted model

When a feature is available in GitLab.com or GitLab Self-Managed, it should be configurable to use a self-hosted model. To make the feature configurable to use a self-hosted model:

- Add the feature's name to ee/app/models/ai/feature_setting.rb as a stable feature or a beta/experimental feature.

- Add the feature's name to the ee/lib/gitlab/ai/feature_settings/feature_metadata.yml file, including the list of model families it supports.

- Add the unit primitive to config/services/self_hosted_models.yml in the gitlab-cloud-connector repository. This merge request can be used as a reference.

- Update the associated specs to reflect the changes above.

See the following merge requests for reference:

- Move Code Review Summary to Beta in Self-Hosted Duo

- Move Vulnerability Explanation to Beta in Self-Hosted Duo

Adding prompts for the feature

For each model family you want to support for the feature, you must add a prompt. Prompts are stored in the AI Gateway repository.

In most cases, the prompt that is used on GitLab.com is also used for Self-Hosted Duo.

Please refer to the following Merge Requests for reference:

Your feature should now be available in GitLab Duo Self-Hosted. Restart your GDK instance to apply the changes and test the feature.

Testing your feature

After implementing your AI feature, it's essential to validate its quality and effectiveness. This section outlines how to test your feature using the Centralized Evaluation Framework (CEF).

When designing your evaluation, you are responsible for selecting the most appropriate metric for your feature (for example, the percentage of responses rated 3 or 4 by an LLM judge) and setting a threshold that reflects acceptable performance. All evaluations use a standardized traffic-light scoring system:

- Green / Fully compatible: The results meet or exceed your defined threshold for the chosen metric.

- Yellow / Largely compatible: The results are close to the threshold but do not fully meet it.

- Red / Not compatible: The results fall significantly below the threshold.

The traffic-light system will automatically interpret your results according to these thresholds, providing a consistent classification across all AI features.
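The classification described above can be sketched in a few lines of Python. This is an illustration only, not the CEF implementation: the function name classify and the margin parameter are assumptions, because the source does not say how close to the threshold a result must be to count as yellow.

```python
def classify(metric: float, threshold: float, margin: float = 5.0) -> str:
    """Map a metric value to a traffic-light label.

    metric and threshold share the same unit (here, percentage points).
    margin is a hypothetical band below the threshold that still counts
    as "close"; choose a value that suits your feature.
    """
    if metric >= threshold:
        return "green"   # fully compatible: meets or exceeds the threshold
    if metric >= threshold - margin:
        return "yellow"  # largely compatible: close to the threshold
    return "red"         # not compatible: significantly below the threshold


if __name__ == "__main__":
    for value in (85, 77, 50):
        print(value, classify(value, threshold=80))
```

With a threshold of 80 and the assumed margin of 5, a result of 85 is green, 77 is yellow, and 50 is red.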

For example, to evaluate Duo Chat's performance on GitLab documentation questions, we use an LLM-based scoring system. Another language model rates each response on a 1-4 scale, and we calculate the percentage of responses that achieve scores of 3 or 4. This percentage serves as our benchmark metric for comparing the effectiveness of different LLMs, and the traffic-light system indicates the level of compatibility for each model based on the defined threshold.
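As a rough sketch of how such a metric can be computed, the snippet below takes a list of judge scores on the 1-4 scale and returns the percentage that are 3 or 4. The function name share_of_passing_scores and the sample scores are hypothetical and are not taken from the evaluation tooling.

```python
def share_of_passing_scores(scores, passing=(3, 4)) -> float:
    """Return the percentage of scores that fall in the passing set."""
    if not scores:
        raise ValueError("scores must not be empty")
    invalid = [s for s in scores if s not in (1, 2, 3, 4)]
    if invalid:
        raise ValueError(f"scores outside the 1-4 scale: {invalid}")
    passed = sum(1 for s in scores if s in passing)
    return 100.0 * passed / len(scores)


if __name__ == "__main__":
    judge_scores = [4, 3, 2, 4, 1, 3, 3, 4]  # made-up example data
    print(f"{share_of_passing_scores(judge_scores):.1f}% rated 3 or 4")
```

The resulting percentage is the value you would compare against your threshold.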

The general process for testing your feature is as follows:

- Create a dataset: Prepare a representative dataset that covers the scenarios your feature is expected to handle.

- Define evaluation metrics and thresholds: Choose at least one metric that reflects your feature's goals and set a threshold for the traffic-light system.

- Automate the evaluation: Use the evaluation-runner to automate running your evaluation pipeline. Refer to the evaluation-runner README for setup instructions.

- Review and iterate: After running your evaluation, review the results. If the results are unsatisfactory, iterate on your prompts, dataset, or model configuration, and re-run the evaluation.

For more details, refer to the CEF documentation or this step-by-step guide.